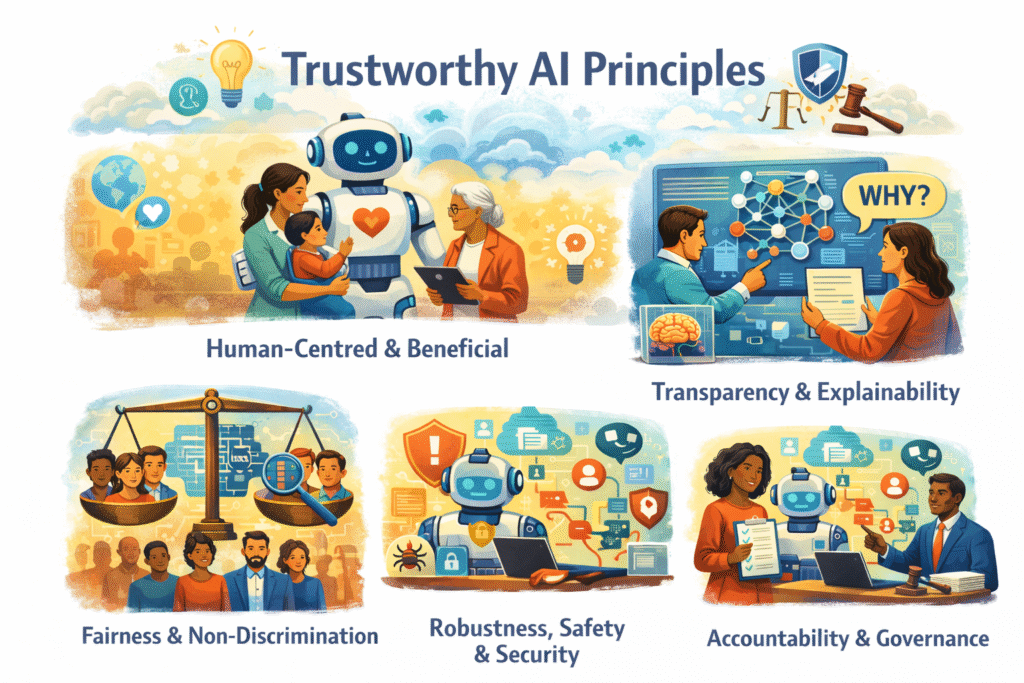

Executive Summary

Trustworthy AI means designing, developing, deploying, and using AI systems in ways that are human-centred, fair, transparent, safe, secure, and accountable. These principles reflect an international consensus, as expressed in the OECD AI Principles, and align broadly with UK and EU regulatory approaches.

Human-centred

AI should enhance human wellbeing and dignity, supporting people rather than replacing meaningful human judgement.

Key points

- Respect human rights and democratic values

- Maintain meaningful human oversight

- Focus on clear social benefit

Fairness

AI systems should avoid unjustified bias and discrimination, both in their design and in their real-world impact.

Key points

- Identify and mitigate bias in data and models

- Test impacts across diverse groups

- Design for inclusion and accessibility

Transparency

People should understand when AI is used and have appropriate insight into how decisions or outputs are produced.

Key points

- Clearly signal AI use

- Provide explanations proportionate to risk

- Document purpose, limits, and assumptions

Safety and security

AI should perform reliably under normal and adverse conditions and be resilient to misuse or attack.

Key points

- Rigorous testing before and after deployment

- Ongoing monitoring for drift and failure

- Strong security and misuse protections

Accountability

Responsibility for AI outcomes rests with organisations and people, not with the technology itself.

Key points

- Clear ownership and decision accountability

- Audit trails and documentation

- Mechanisms for challenge, oversight, and redress

Trustworthiness Checklist

□ Is the AI system’s purpose clearly beneficial and justified?

□ Are fairness and bias risks identified and mitigated?

□ Can affected people understand and challenge outcomes?

□ Is the system robust, safe, and secure over time?

□ Are accountability and governance clearly assigned?

Risks and Mitigations

- Over-reliance on automation → Human-in-the-loop controls

- Hidden bias or exclusion → Impact assessments and diverse data

- Loss of trust due to opacity → Clear communication and explainability

Governance Takeaway

Trustworthy AI is not a one-off technical task. It requires continuous organisational commitment, leadership accountability, embedded risk management, and regular review across the AI lifecycle.